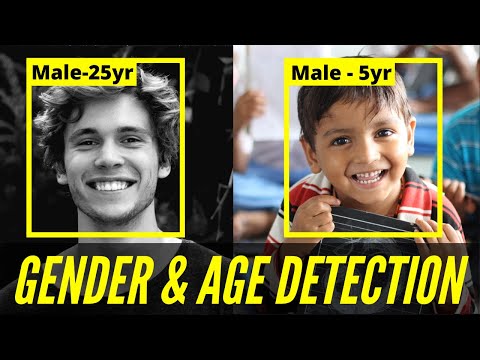

Here at PixLab, we recently deployed on production, a brand new gender/age classification model available to our customers via the FACEMOTION API endpoint.

The new model implementation is based on the ResNet-50 convolutional neural network (CNN) that is 50 layers deep. The network can easily classify images into 1000 object categories, such as keyboard, mouse, pencil, and many animals.

The reference, implementation paper is from: Jiankang Deng, Jia Guo, Niannan Xue, Stefanos Zafeiriou: Additive angular margin loss for deep face recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019 (https://arxiv.org/abs/1801.07698).

The Python/PHP code samples listed below should be able to easily output the age estimation, gender, and emotion pattern by just looking at the facial shape of any present human face in a given picture or video frame using our new classifcation model.

Python Code

- The PHP usage sample can be viewed here or on the PixLab Github repository.

- FACEMOTION is the sole endpoint needed to perform such a task. It should output the rectangle coordinates for each detected human face that you can pass verbatim if desired to other processing endpoints like CROP or MOGRIFY plus the age estimation, gender and emotion pattern of the target face based on its facial shape.

- Finally, all of our production ready, code samples are available to consult at our samples page or the PixLab Gihtub repository.