Seamless PDF automation with Vision AI, OCR, and Document Intelligence

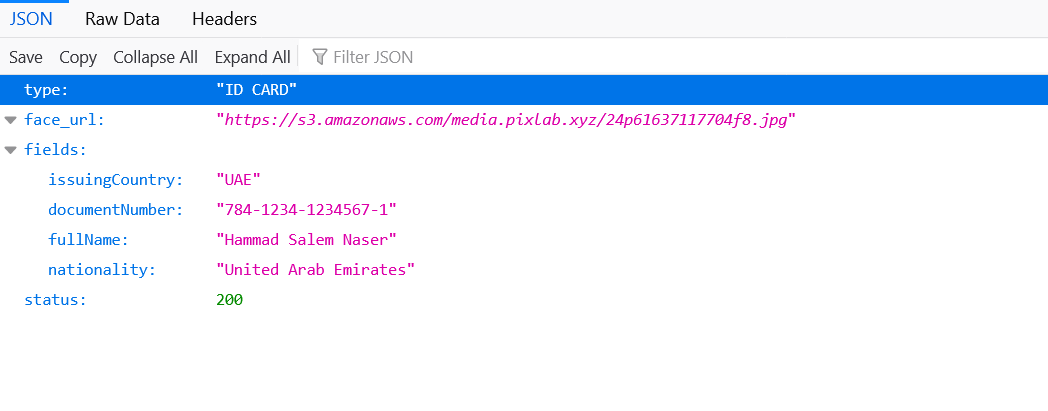

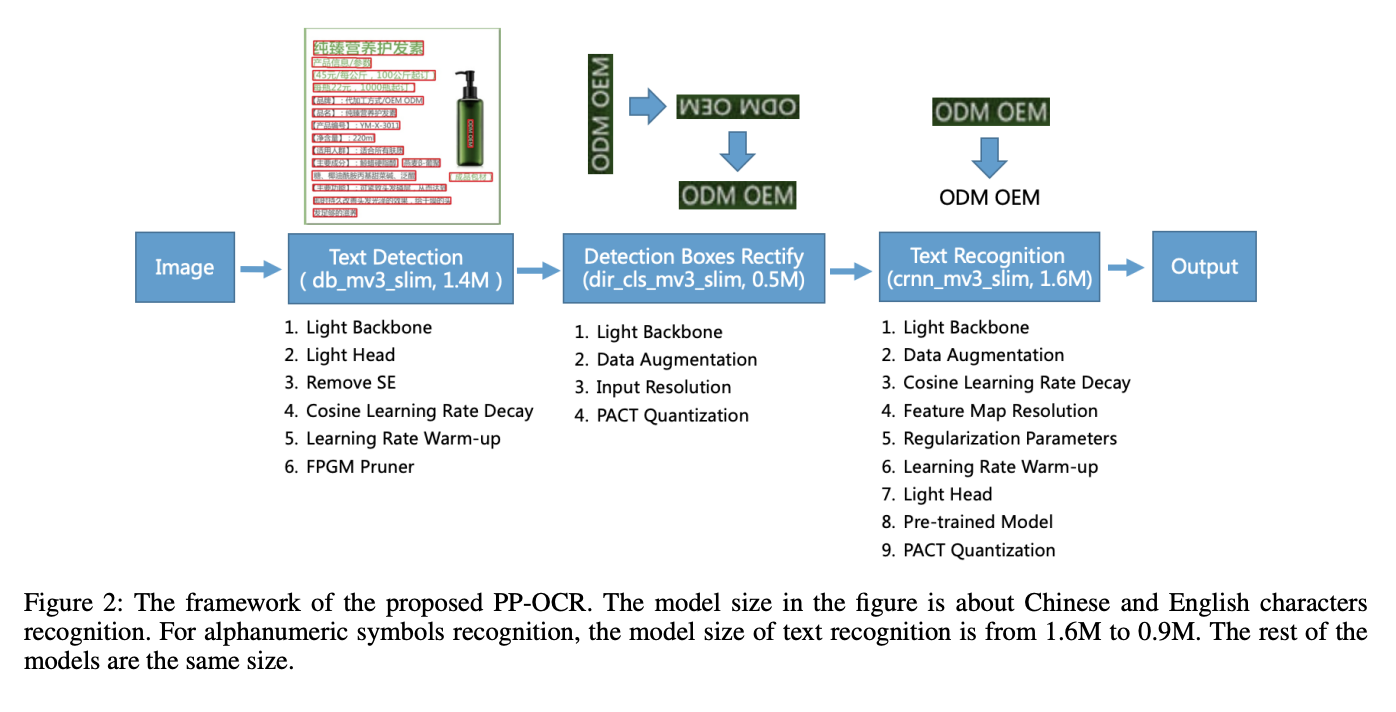

We’re excited to announce the release of three powerful new PixLab PDF API endpoints, designed to make PDF workflows smarter, faster, and fully automated. With these additions, developers can now convert PDFs to images, extract text with OCR, scan official ID documents, and programmatically generate PDF documents with ease.

Whether you're building fintech onboarding flows, automated invoice systems, document processing pipelines, or business reporting tools, PixLab’s new PDF APIs are built for you.

✅ pdftoimg — Convert PDFs to Images

Convert every page inside a PDF into high-resolution images for preview, analysis, or AI-based processing.

Perfect for:

- Document previews & thumbnails

- Feeding pages into Vision LLMs

- AI-based document analysis pipelines

👉 Documentation → https://pixlab.io/endpoints/pdftoimg
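As a rough illustration of what a pdftoimg call could look like, here is a minimal sketch that only builds the request URL. The base URL `https://api.pixlab.io` and the parameter names `src`, `export`, and `key` are assumptions made for this example, so check them against the documentation linked above before relying on them.

```python
from urllib.parse import urlencode

# Assumption: the base URL and the parameter names ("src", "export", "key")
# are illustrative only; confirm them in the pdftoimg documentation.
API_BASE = "https://api.pixlab.io"


def build_pdftoimg_url(pdf_url: str, api_key: str, export: str = "jpeg") -> str:
    """Return a GET URL asking the service to render a remote PDF as an image."""
    query = urlencode({"src": pdf_url, "export": export, "key": api_key})
    return f"{API_BASE}/pdftoimg?{query}"


if __name__ == "__main__":
    # Placeholder inputs; substitute a real PDF link and your own API key.
    print(build_pdftoimg_url("https://example.com/invoice.pdf", "YOUR_API_KEY"))
```

The resulting URL can be passed to any HTTP client. Keeping URL construction separate from the network call makes the request easy to log and unit test.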

✅ pdfscan — PDF Text Extraction & OCR

Transform scanned or image-based PDFs into searchable, machine-readable text using PixLab’s OCR engine.

Use Cases:

- Invoice and receipt automation

- Document processing

- Enterprise data extraction integrations

👉 Documentation → https://pixlab.io/endpoints/ocr
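Once an OCR request returns, the reply still has to be checked before the text is used downstream. The sketch below assumes a JSON reply carrying a numeric `status` field, an `error` message on failure, and the recognized text under a `text` field. All three field names are hypothetical here, so adjust them to the reply format described in the documentation.

```python
import json


def extract_text(raw_reply: str) -> str:
    """Parse a JSON reply and return the recognized text.

    Assumption: the reply has "status", "error", and "text" fields.
    These names are hypothetical and used for illustration only.
    """
    reply = json.loads(raw_reply)
    if reply.get("status") != 200:
        # Surface the service-side message instead of failing silently.
        raise RuntimeError(reply.get("error", "unknown error"))
    return reply.get("text", "")


if __name__ == "__main__":
    sample = '{"status": 200, "text": "Invoice #1042 Total: 99.00"}'
    print(extract_text(sample))
```

Raising on a non-200 status keeps failed scans from flowing into invoice or receipt automation as empty strings.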

✅ pdfgen — Generate PDF Files Programmatically

Create clean, branded PDF documents from HTML, data payloads, or structured templates.

Ideal for:

- Invoice generation

- Digital certificates & reports

- Legal and business form creation

- Workflow automation & SaaS platforms

👉 Documentation → https://pixlab.io/endpoints/pdfgen
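Generating a PDF from HTML typically means posting the markup to the endpoint. The following sketch prepares such a request without sending it. The URL `https://api.pixlab.io/pdfgen` and the JSON field names `html` and `key` are assumptions for illustration, not the confirmed request format.

```python
import json
from urllib.request import Request

# Assumption: endpoint URL and JSON field names ("html", "key") are
# hypothetical; the real request format is defined in the documentation.
PDFGEN_URL = "https://api.pixlab.io/pdfgen"


def build_pdfgen_request(html: str, api_key: str) -> Request:
    """Prepare (but do not send) a POST request carrying HTML to render."""
    body = json.dumps({"html": html, "key": api_key}).encode("utf-8")
    return Request(
        PDFGEN_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_pdfgen_request("<h1>Invoice #1042</h1>", "YOUR_API_KEY")
    print(request.get_method(), request.full_url)
```

To actually send it, pass the prepared request to `urllib.request.urlopen` and read the response body.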

Built for Modern Document Automation

These Vision APIs are designed to support end-to-end intelligent document pipelines:

- ✅ Convert → Extract → Analyze → Export

- ✅ Multi-language OCR + Vision AI

- ✅ Works with spreadsheets, scanned forms, images, & office docs

- ✅ Simple API integration across all programming languages
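The Convert → Extract → Analyze → Export flow above can be sketched as four pluggable stages. This is a minimal illustration, not PixLab code: the function `run_pipeline` and the stub stages in the demo are hypothetical, and in a real integration each stage would wrap the corresponding API call.

```python
from typing import Any, Callable, List


def run_pipeline(
    pdf: str,
    convert: Callable[[str], List[Any]],
    extract: Callable[[Any], str],
    analyze: Callable[[List[str]], Any],
    export: Callable[[Any], Any],
) -> Any:
    """Run the four stages in order, passing each result to the next stage."""
    pages = convert(pdf)                       # Convert: PDF -> page images
    texts = [extract(page) for page in pages]  # Extract: one text per page
    findings = analyze(texts)                  # Analyze: all page texts at once
    return export(findings)                    # Export: final report or document


if __name__ == "__main__":
    # Stub stages stand in for real API calls.
    result = run_pipeline(
        "invoice.pdf",
        convert=lambda pdf: [f"{pdf}-page-{n}" for n in (1, 2)],
        extract=lambda page: f"text from {page}",
        analyze=lambda texts: {"pages": len(texts)},
        export=lambda findings: f"report: {findings}",
    )
    print(result)
```

Passing the stages in as functions keeps each step replaceable, so a stub can be swapped for a real endpoint call one stage at a time.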

Start Building with PixLab

These endpoints are now live and available to all PixLab users.

👉 Browse all PDF-related endpoints:

https://pixlab.io/api-endpoints

🔐 Get your API key:

https://console.pixlab.io

What’s Coming Next

- PDF annotation API suite

- Merge / split PDF tools

- Structured invoice extraction templates

- Vision-powered business form understanding

Document Intelligence for the AI Era

At PixLab, our mission is simple:

Deliver world-class Vision AI and Document Intelligence tools to every developer.

Got feedback or need help getting started?

💬 https://pixlab.io/support

— The PixLab Vision Team