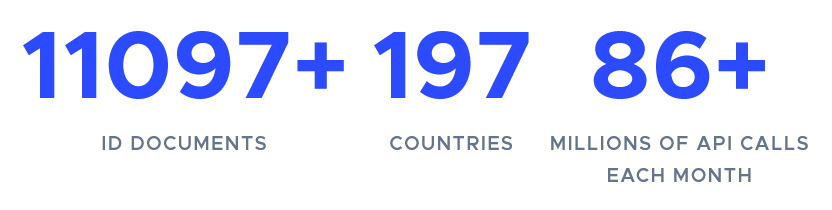

Liveness detection is a critical component in biometric security systems, ensuring that the face being scanned belongs to a live person rather than a photograph, video, or mask. FACEIO, a leader in facial authentication technology, addresses this problem with its AI models. This blog post explores how FACEIO handles liveness detection, from the underlying techniques to the security settings you can enable.

What is Liveness Detection?

Liveness detection is a security feature used in facial recognition systems to verify that the scanned face is from a live individual. It prevents spoofing attacks by detecting natural facial movements and responses. Various techniques employed in liveness detection include:

- Motion Analysis: Detects natural movements such as blinking and head movements.

- Texture Analysis: Differentiates between the texture of live skin and that of a photograph or screen.

- Challenge-Response: Prompts the user to perform actions like blinking or smiling to prove liveness.
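To make the motion-analysis idea concrete, here is a minimal sketch of blink counting. It assumes you already have a per-frame eye-aspect-ratio (EAR) value from some face landmark detector; the function names, the 0.2 threshold, and the two-frame minimum are illustrative choices, not FACEIO's internal method.

```javascript
// Illustrative only: counts blinks in a series of eye-aspect-ratio (EAR) samples.
// EAR drops while the eye is closed, so a blink shows up as a short run of
// low values followed by a recovery. A printed photo never produces that dip.
function countBlinks(earSamples, closedThreshold = 0.2, minClosedFrames = 2) {
  let blinks = 0;
  let closedRun = 0; // consecutive frames with the eye considered closed
  for (const ear of earSamples) {
    if (ear < closedThreshold) {
      closedRun += 1;
    } else {
      // The eye reopened: count a blink only if it stayed closed long enough.
      if (closedRun >= minClosedFrames) blinks += 1;
      closedRun = 0;
    }
  }
  // A closed run that is still open-ended when the samples stop is not counted.
  return blinks;
}

// Treats the sequence as live when it contains at least `requiredBlinks` blinks.
function looksLive(earSamples, requiredBlinks = 1) {
  return countBlinks(earSamples) >= requiredBlinks;
}
```

A real system would combine a signal like this with texture analysis and challenge-response, since a replayed video can also contain blinks.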

Implementing Liveness Detection with FACEIO

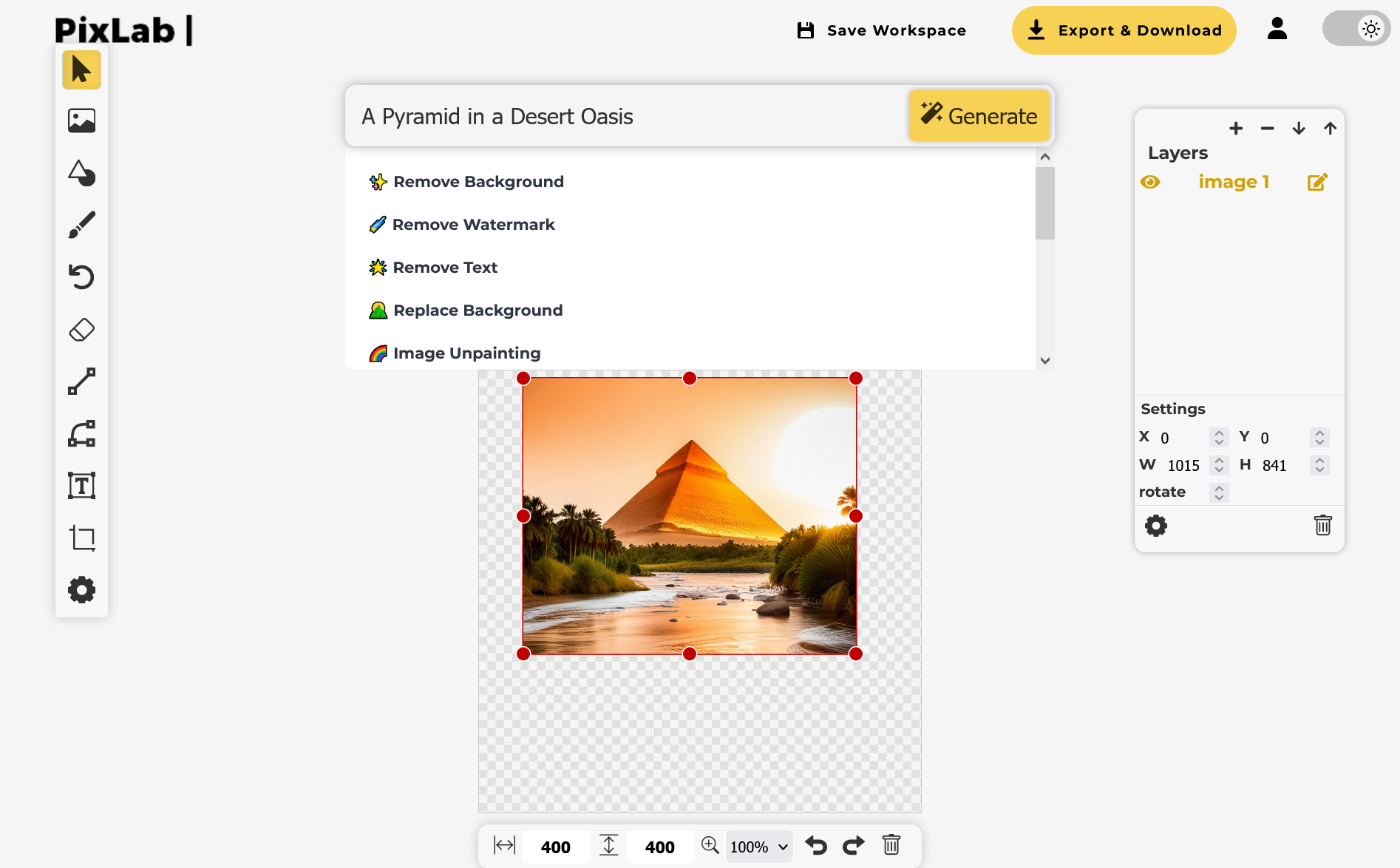

FACEIO provides an easy-to-integrate solution for facial authentication with built-in liveness detection. Here is a step-by-step guide to activate liveness detection on your FACEIO Application:

Setting Up FACEIO:

- Sign up and create an account on the FACEIO Console.

Integrating FACEIO SDK:

- Create an application on the FACEIO Console, then add the fio.js JavaScript SDK to your project. Follow the integration guide for your JavaScript framework of choice, whether that is React, Next, Vue, Svelte, or React-Native.

Initializing FACEIO:

- Initialize a new fio.js object instance in your JavaScript code with your Application Public ID as follows:

```javascript
const faceio = new faceIO("your-application-pub-id");
```

Implementing Liveness Detection:

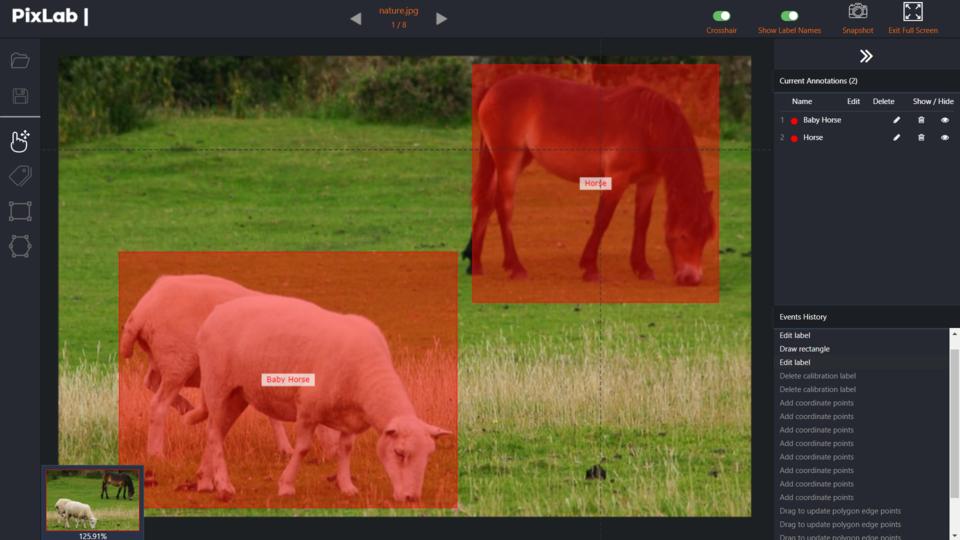

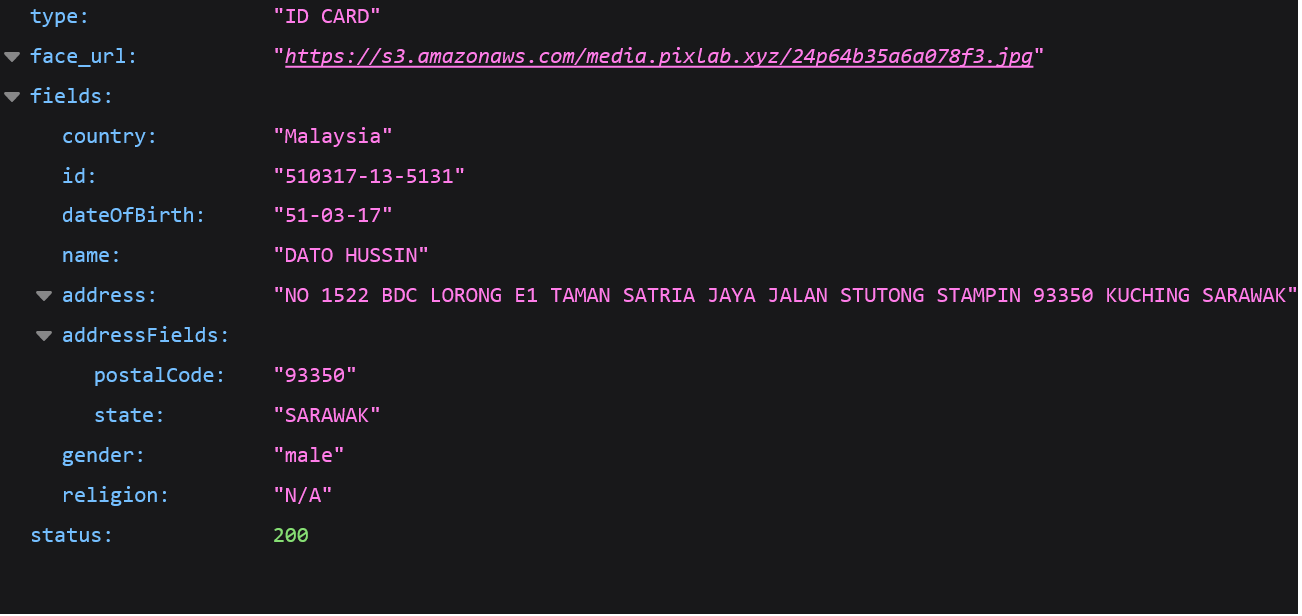

First, activate Face Anti-Spoofing: connect to the FACEIO Console, select the target application, and navigate to the SECURITY tab from the manager main view. Then activate the Protect Against Deep-Fakes & Face Spoof Attempts security option. With that option enabled, you can run a liveness check from your JavaScript code as follows:

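```javascript
// Runs an authentication session and logs whether the liveness check passed.
async function performLivenessCheck() {
  try {
    // Start the session with the liveness-check action.
    const response = await faceio.authenticate({ action: "liveness-check" });
    // Accept the session only when the reported liveness score is above 0.9.
    if (response.livenessScore > 0.9) {
      console.log("User authenticated successfully");
    } else {
      console.warn("Liveness check failed");
    }
  } catch (error) {
    // The call was rejected; log the error it reported.
    console.error("Liveness check failed", error);
  }
}
```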
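When a check fails, it helps to tell the user why. The sketch below turns a rejected error code into a readable message. It assumes the SDK exposes an error-code table (referred to here as `fioErrCode`) with entries such as `PAD_ATTACK`, `NO_FACES_DETECTED`, and `PERMISSION_REFUSED`; treat those names as assumptions and confirm them against the fio.js reference for your SDK version. `describeAuthError` is a hypothetical helper, not part of the SDK.

```javascript
// Hypothetical helper: maps an error code rejected by the SDK to a message.
// `codes` is the SDK's error-code table (assumed to be the global `fioErrCode`);
// it is passed in explicitly so the function can be exercised without the SDK.
function describeAuthError(errCode, codes) {
  const fallback = "Authentication failed. Please try again.";
  // Guard against a missing code so it cannot match a missing table entry.
  if (errCode === undefined || errCode === null) return fallback;
  switch (errCode) {
    case codes.PAD_ATTACK: // assumed name for a detected spoof attempt
      return "Spoof attempt detected. Please present your live face, not a photo or video.";
    case codes.NO_FACES_DETECTED: // assumed name
      return "No face detected. Please face the camera.";
    case codes.PERMISSION_REFUSED: // assumed name
      return "Camera access was denied.";
    default:
      return fallback;
  }
}

// Possible usage inside a catch block, assuming `fioErrCode` is defined:
//   console.error(describeAuthError(error, fioErrCode));
```

Passing the code table as a parameter keeps the mapping independent of the SDK's globals, which also makes it straightforward to unit test.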

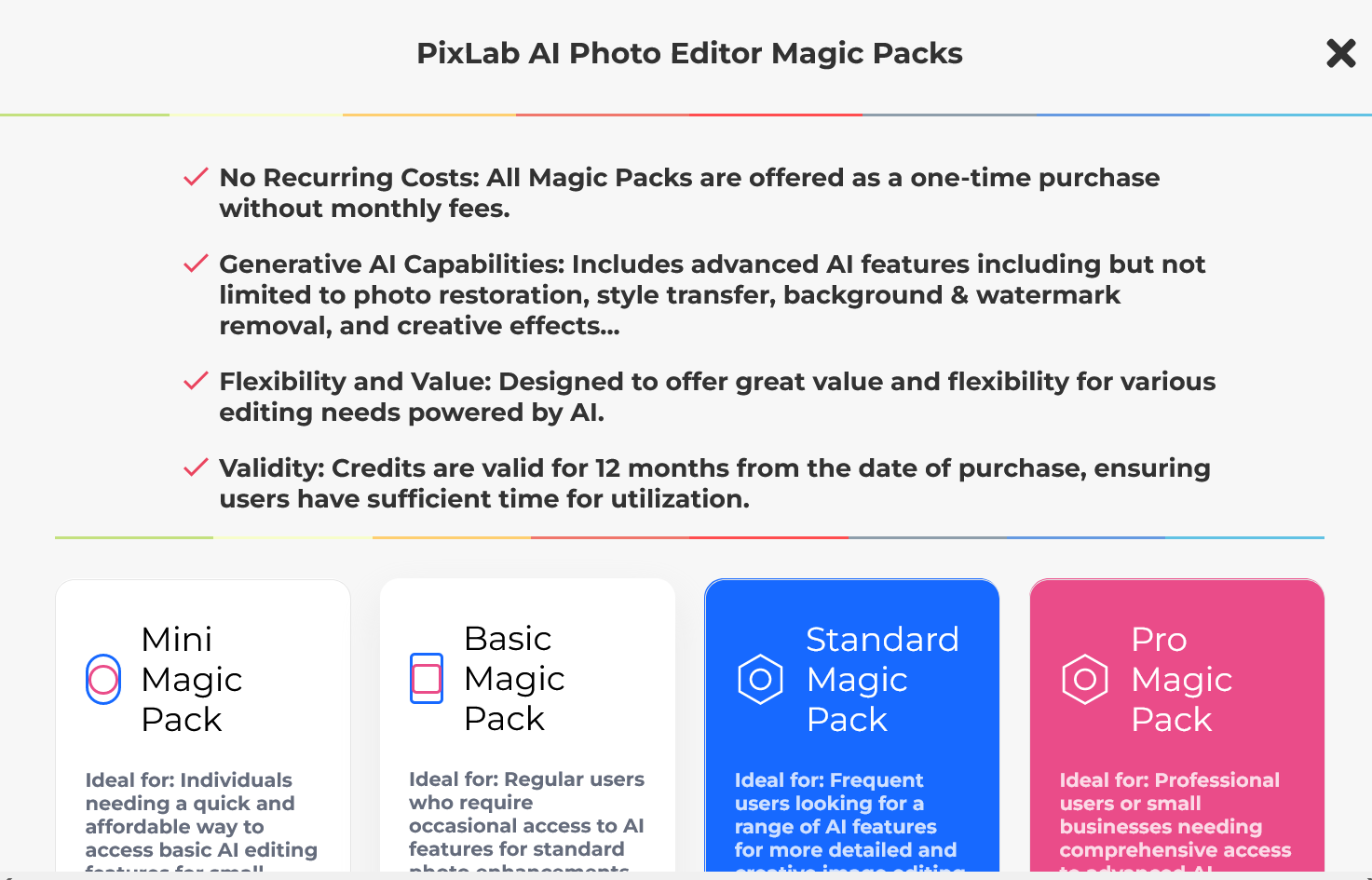

Advanced Security Features of FACEIO

FACEIO's commitment to security extends beyond basic liveness detection. Here are some of the advanced features that enhance its security:

- Multifactor Authentication (MFA): Combine facial recognition with other authentication methods for added security.

- Customizable User Prompts: Tailor instructions and prompts shown to users during the liveness check.

- Analytics and Reporting: Access detailed reports on authentication attempts to monitor and improve system performance.

Best Practices for Secure Implementation

FACEIO provides several security best practices to ensure robust protection against spoofing and other security threats:

- Reject Weak PIN Codes: Ensure users create strong PIN codes during enrollment.

- Prevent Duplicate Enrollment: Avoid multiple enrollments by the same user.

- Protect Against Deepfakes: Use liveness detection to counteract spoofing attempts.

- Forbid Minors: Prevent minors from enrolling in the application.

- Always Ask for PIN Code: Require PIN code confirmation during authentication for added security.

- Enforce PIN Code Uniqueness: Ensure each user's PIN code is unique.

- Ignore Obscured Faces: Reject partially masked or poorly lit faces.

- Reject Missing HTTP Headers: Prevent requests without proper origin or referer headers.

- Restrict Domain and Country: Limit widget instantiation to specific domains and countries.

- Enable Webhooks: Use webhooks for real-time updates on user interactions.
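As an illustration of the webhook item above, here is a minimal sketch of how a backend might interpret an incoming event once the JSON body has been parsed. The payload shape (`eventName` with values `enroll`, `auth`, and `deletion`, plus `facialId`) is an assumption made for this example, and `summarizeWebhookEvent` is a hypothetical helper; check the FACEIO webhook reference for the actual schema.

```javascript
// Hypothetical helper: turns a parsed webhook payload into a short summary.
// Assumed payload shape (not confirmed here): { eventName: string, facialId: string }.
function summarizeWebhookEvent(payload) {
  // Reject anything that does not carry a string event name.
  if (!payload || typeof payload.eventName !== "string") {
    return { ok: false, reason: "malformed payload" };
  }
  switch (payload.eventName) {
    case "enroll": // assumed event name
      return { ok: true, message: `New user enrolled: ${payload.facialId}` };
    case "auth": // assumed event name
      return { ok: true, message: `User authenticated: ${payload.facialId}` };
    case "deletion": // assumed event name
      return { ok: true, message: `User deleted: ${payload.facialId}` };
    default:
      return { ok: false, reason: `unknown event: ${payload.eventName}` };
  }
}
```

In practice you would call a function like this from your HTTP endpoint and use the result to update your own user records or audit log.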

Conclusion

Integrating FACEIO for liveness detection and facial authentication in JavaScript significantly enhances digital security. Its robust API and user-friendly JavaScript library make it easy for developers to implement biometric authentication, preventing spoofing and unauthorized access. FACEIO's advanced features and best practices ensure both security and user experience are prioritized, making it a valuable addition to any web application's defense against modern threats.

For more information, visit the FACEIO website.

Published in: Level Up Coding